In the previous blog I described a general framework for thinking about Bookmaker overround.

There I discussed, in the context of the two-outcome case, the choice of a functional form to describe a team's overround in terms of its true probability as assessed by the Bookmaker. As one very simple example I suggested oi = (1-pi), which we could use to model a Bookmaker who embeds overround in the price of any team by setting it to 1 minus the team's assessed probability of victory.

Whilst we could choose just about any function, including the one I've just described, for the purpose of modelling Bookmaker overround, choices that fit empirical reality are actually, I now realise, quite circumscribed. This is because of the observable fact that the total overround in any head-to-head market, T, appears to be constant, or close to it, in every game regardless of the market prices, and hence the underlying true probability assessments, of the teams involved. In other words, the total overround in the head-to-head market when 1st plays last is about the same as when 1st plays 2nd.

So, how does this constrain our choice of functional form? Well, we know that T is defined as 1/m1 + 1/m2 - 1, where mi is the market price for team i, and that mi = 1/(pi(1+oi)), from which, using p2 = 1 - p1, we can determine that:

T = p1(o1 - o2) + o2

If T is to remain constant across the full range of values of p1 then we need the derivative with respect to p1 of the RHS of this equation to be zero for all values of p1. This implies that the functions chosen for o1 and o2 must satisfy the following equality:

p1(o1' - o2') + o2' = o2 - o1 (where the dash signifies a derivative with respect to p1).
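For anyone who wants the intermediate steps, here is a sketch of the derivation. It uses only the definitions of T and mi given above, plus the two-outcome assumption that p2 = 1 - p1.

```latex
\begin{aligned}
T &= \frac{1}{m_1} + \frac{1}{m_2} - 1 \\
  &= p_1(1 + o_1) + (1 - p_1)(1 + o_2) - 1 \\
  &= p_1(o_1 - o_2) + o_2 \\[6pt]
\frac{dT}{dp_1} &= (o_1 - o_2) + p_1(o_1' - o_2') + o_2' = 0 \\[6pt]
\Longrightarrow \quad p_1(o_1' - o_2') + o_2' &= o_2 - o_1
\end{aligned}
```

The first term of the derivative comes from applying the product rule to p1(o1 - o2).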

I doubt that many functional forms o1 and o2 (both of which we're assuming are functions of p1, by the way) exist that will satisfy this equation for all values of p1, especially if we also impose the seemingly reasonable constraint that o1 and o2 be of equivalent form, albeit that o1 might be expressed in terms of p1 and o2 in terms of (1-p1), which we can think of as p2.

Two forms that do satisfy the equation, the proof of which I'll leave as an exercise for any interested reader to check, are:

- The Overround-Equalising approach : o1 = o2 = k, a constant, and

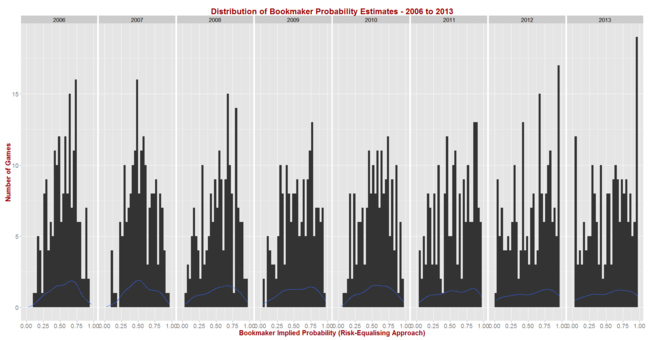

- The Risk-Equalising approach : o1 = e/p1; o2 = e/(1-p1), with e a constant

There may be another functional form that satisfies the equality above, but I can't find it. (There's a rigorous non-existence proof for you.) Certainly oi = 1 - pi, which was put forward earlier, doesn't satisfy it, and I can postulate a bunch of other plausible functional forms that similarly fail. What you find when you use these forms is that total overround changes with the value of p1.
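A quick numerical check of these claims can be sketched as follows. The constants `K` and `E` are illustrative values I've picked for the example, not empirical estimates, and the grid of probabilities is arbitrary. The code builds prices from mi = 1/(pi(1+oi)) and then measures how much T = 1/m1 + 1/m2 - 1 moves as p1 varies.

```python
# Illustrative constants (assumptions for this sketch only).
K = 0.06  # per-team overround under the Overround-Equalising approach
E = 0.03  # constant e under the Risk-Equalising approach

# Each form maps a team's own assessed probability to its overround.
FORMS = {
    "overround_equalising": lambda p: K,
    "risk_equalising": lambda p: E / p,
    "one_minus_p": lambda p: 1.0 - p,
}


def total_overround(p1, form):
    """Total overround T = 1/m1 + 1/m2 - 1 for a two-team market."""
    p2 = 1.0 - p1
    m1 = 1.0 / (p1 * (1.0 + form(p1)))  # market price for team 1
    m2 = 1.0 / (p2 * (1.0 + form(p2)))  # market price for team 2
    return 1.0 / m1 + 1.0 / m2 - 1.0


def spread(form, grid):
    """Range of T over the grid; zero (up to rounding) means T is constant."""
    totals = [total_overround(p, form) for p in grid]
    return max(totals) - min(totals)


GRID = [i / 100 for i in range(5, 96)]  # p1 from 0.05 to 0.95
SPREADS = {name: spread(form, GRID) for name, form in FORMS.items()}

for name, s in SPREADS.items():
    print(f"{name}: spread in T = {s:.6f}")
```

The first two forms should show a spread of essentially zero, while oi = 1 - pi gives T = 2p1(1-p1), which clearly varies with p1.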

So, if we want to choose functions for o1 and o2 that produce results consistent with the observed reality that total overround remains constant across all values of the assessed true probability of the two teams, it seems that we've only three options (maybe four):

- Assume that the Bookmaker follows the Overround-Equalising approach

- Assume that the Bookmaker follows the Risk-Equalising approach

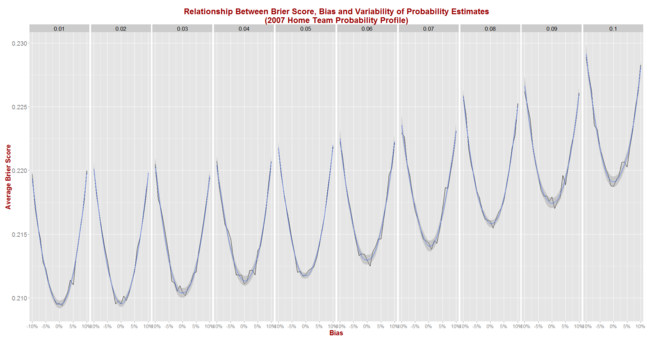

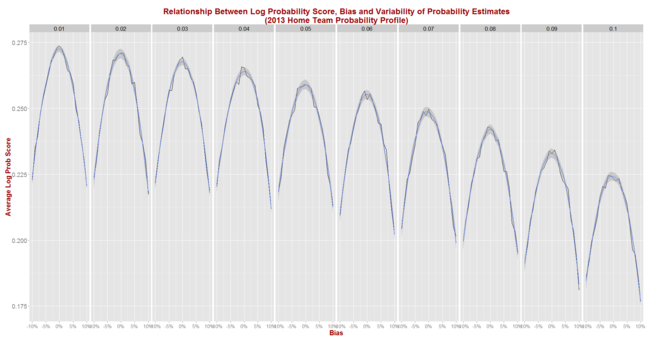

- Assume that the Bookmaker chooses one team, say the favourite or the home team, and establishes its overround using a pre-determined function relating its overround to its assessed victory probability. He then sets a price for the other team that delivers the total overround he is targeting. This is effectively the path I followed in this earlier blog where I described what's come to be called the Log Probability Score Optimising (LPSO) approach.
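The mechanics of that third option can be sketched roughly as below. To be clear, `example_overround` is a made-up function chosen purely for illustration; it is not the LPSO form, and the names `price_market`, `p_fav` and `target_total` are my own. The second team's price simply falls out of T = 1/m1 + 1/m2 - 1 once the first team's price is fixed.

```python
def price_market(p_fav, target_total, overround_fn):
    """Price a two-team market by fixing one team's overround first.

    p_fav        -- assessed victory probability of the chosen team
    target_total -- the total overround T the Bookmaker is targeting
    overround_fn -- hypothetical function mapping p_fav to that team's overround

    Returns (m_fav, m_other, o_other), where o_other is the overround
    implied for the second team rather than chosen directly.
    """
    if not 0.0 < p_fav < 1.0:
        raise ValueError("p_fav must lie strictly between 0 and 1")

    o_fav = overround_fn(p_fav)
    inv_fav = p_fav * (1.0 + o_fav)           # 1/m_fav, from m = 1/(p(1+o))
    inv_other = 1.0 + target_total - inv_fav  # rearranging T = 1/m1 + 1/m2 - 1
    if inv_other <= 0.0:
        raise ValueError("no valid price for the other team at this target")

    m_fav = 1.0 / inv_fav
    m_other = 1.0 / inv_other
    o_other = inv_other / (1.0 - p_fav) - 1.0  # implied overround, other team
    return m_fav, m_other, o_other


def example_overround(p):
    """Purely illustrative: overround shrinks as the team becomes more likely to win."""
    return 0.1 * (1.0 - p)


m_fav, m_other, o_other = price_market(0.7, 0.06, example_overround)
print(f"favourite: {m_fav:.3f}, other team: {m_other:.3f}, implied overround: {o_other:.3f}")
```

Nothing in this sketch guarantees that the implied overround for the second team is positive. A poorly chosen function or target can leave that team priced above its fair value, so any candidate function would need to be checked across the full range of probabilities.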

A fourth, largely unmodellable option would be that he simultaneously sets the market prices of both teams so that they together produce a market with the desired total overround while accounting for his assessment of the two teams' victory probabilities so that a wager on either team has negative expectation. He does this, we'd assume, without employing a pre-determined functional form for the relationship between overround and probability for either team.

If these truly are the only logical options available to the Bookmaker then MAFL, it turns out, is already covering the complete range since we track the performance of a Predictor that models its probability assessments by following an Overround-Equalising approach, of another Predictor that does the same using a Risk-Equalising approach, and of a third (Bookie_LPSO) that pursues a strategy consistent with the third option above. That's serendipitously neat and tidy.

The only area for future investigation would then be to seek a solution superior to LPSO for the third approach described above. Here we could use any of the functional forms I listed in the previous blog, but could only apply them to the determination of the overround for one of the teams - say the home team or the favourite - with the price and hence overround for the remaining team determined by the need to produce a market with some pre-specified total overround.

That's enough for today though ...